The hidden complexities of transactions

Transactions can be deceptive in their apparent simplicity. While they are crucial for ensuring data integrity, they can also become a minefield of complexity, especially when it involves external elements like third-party APIs, email services, or other networked communications. These complexities are frequently overlooked, making it all the more important to approach transactions with caution.

A real-world scenario: A bank transfer

Consider a simple bank transfer system where a transfer requires debiting one account, crediting another, and then sending a confirmation email to the user.

The following could be one possible implementation that aims to make the whole process transactional.

try {

begin_transaction() // Database operation

debit_source_account() // Database operation

credit_destination_account() // Database operation

send_email_notification() // Non-database operation

commit_transaction() // Database operation

} catch (error) {

rollback_transaction() // Database operation

}

Although this looks very clean with good intentions, unfortunately, it has a fundamental flaw in the following line:

send_email_notification() // 💣 💣 💣 💣 💣

Blocking, a domino effect...

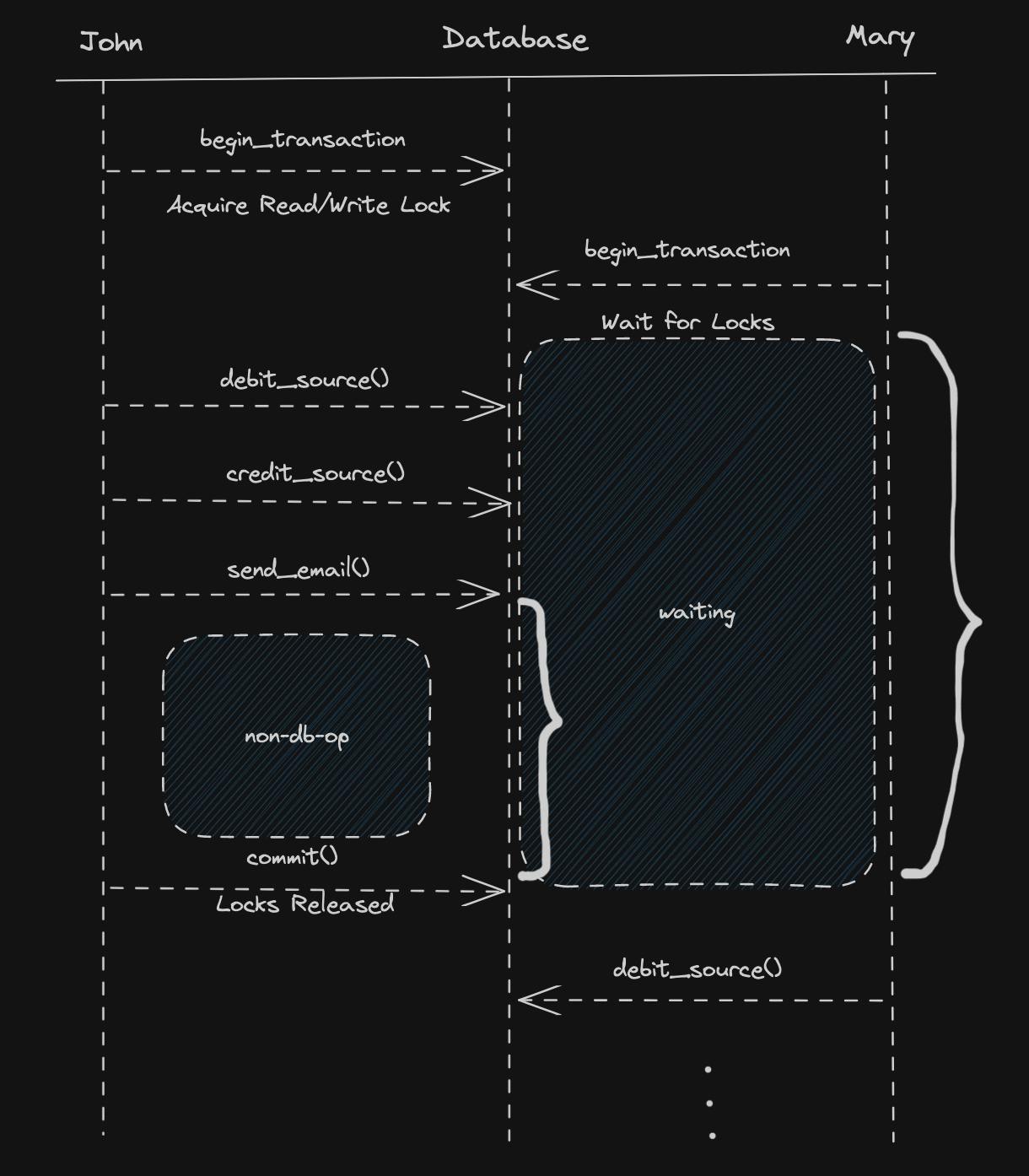

When a transaction starts, it acquires a database connection from the connection pool. This connection is then "locked" to that transaction until it either commits or rolls back. No other transaction can use that particular connection while it's being used.

This can result in one of the following scenarios:

Resource Contention: If a transaction is taking a long time to complete, it continues to hold the database connection. Other transactions that need to use the database are then queued up, waiting for available connections.

Deadlocks: In some cases, one transaction might be waiting for a resource (row or table) locked by a second transaction, while the second is waiting for a resource locked by the first. This creates a deadlock where neither can proceed.

Connection Pool Exhaustion: Most databases have a maximum number of simultaneous connections they can handle. If transactions are not released promptly, the number of available connections in the pool can reach zero, at which point new transactions cannot start until others complete, significantly slowing down the entire system.

The diagram shows that slow response times from the email server prolong transaction times, leading to increased wait times for other transactions. In environments with high concurrency, this can cause a domino effect, obstructing concurrent operations and reducing connection availability.

This issue transcends email servers; it affects HTTP Requests and all non-database I/O operations, which typically add latency —often tens of milliseconds— to the transaction times. That's why, it's a bad practice to make them part of database transactions and should be avoided.

Optimistic Locking, an alternative

The behavior described above belongs to the default transaction strategy: Pesimistic Locking, where locks happen at the transaction level. There's a different way to approach it with Optimistic Locking which blocks at the operation level and avoids some of the problems with locking and bottlenecks of the Pessimistic approach. See this article for more details https://www.educative.io/answers/whats-the-difference-between-optimistic-and-pessimistic-locking.

Inconsistent Rollbacks

But there's more to the problem regardless of blocking, take this hypothetical example of an e-commerce platform:

try {

begin_transaction() // Database operation

update_inventory() // Database operation

charge_credit_card() // Non-database operation

update_order_status() // Database operation

commit_transaction() // Database operation

} catch (error) {

rollback_transaction() // Database operation

}

In this implementation, there's a non-database operation in the middle of the transaction (charge_credit_card). See how update_order_status depends on charge_credit_card. What happens if update_order_status fails? The transaction will rollback the database state, however, there's no rollback possible for charge_credit_card. This implementation can create an inconsistent state where the customer has been charged, while there's no trace in the database. This will need manual fixes and extra measures to detect.

Some redesign is needed...

In such cases, it's worth looking at the problem from a different angle. Is 100% consistency a strong requirement? Enter Eventual Consistency & Transactional Outboxes.

Transactional outboxes

The Transactional Outbox Pattern is a design approach aimed at achieving atomicity across multiple systems. This is done by grouping all intended operations —whether they are local database changes or external actions— into a single, atomic database transaction that writes to an "outbox" table. Once the transaction commits successfully, a separate processor reads from this outbox table and executes the external actions.

The role of eventual consistency becomes crucial here: if the outbox processor experiences a temporary failure, it can resume operations and ensure that all actions are eventually carried out. This may not occur instantaneously, but over time, all parts of the system will reflect a consistent state. This is the principle of eventual consistency. By adopting this model of consistency, the Transactional Outbox Pattern enables systems to extend the concept of atomicity beyond the confines of a single database transaction.

This is a simplified new implementation for the e-commerce example using a transactional outbox.

try {

begin_transaction() // Database operation

update_inventory(pending) // Database operation

add_to_outbox("charge_credit_card") // Database operation

add_to_outbox("update_order_status") // Database operation

commit_transaction() // Database operation

} catch(error){

rollback_transaction() // Database operation

}

// Separate process

process_outbox() {

foreach operation in pending_operations_fifo {

if (operation is charge_credit_card) {

charge_credit_card()

}

if (operation is update_order_status) {

update_order_status(completed)

}

}

}

The processing of the outbox can be done in a variety of ways, each with its unique benefits. It can join dependent operations and order them in time to ensure they are processed in the required order. Or it can just parallelize operations to maximize performance. One important detail not to miss is to implement a retry mechanism to provide a reliable way to complete operations. Another operational advantage of retries is the ability to send errored operations to a DeadLetterQueue for manual inspection, offering an additional level of control and visibility.

Conclusion

Performing non-database operations in the middle of transactions can have negative consequences. It is crucial to avoid such practices as they can potentially lead to severe data inconsistencies, significant performance degradation, and a wide range of errors that can impact the overall system stability and reliability. Ensuring operational transactionality between different systems is a complex and challenging task that requires careful consideration of various factors, including data synchronization, transaction coordination, and error-handling mechanisms. However, there are several powerful tools and techniques available in the industry such as Transactional Outbox that can effectively address these challenges without raising the complexity bar too much. Something to keep in mind when looking at transactional code!